Discover why large organisations opt for Data Mesh and what the build involves

Data Mesh empowers business units to deliver data outputs at pace

A Data Mesh architecture is a new way for large organisations to manage large amounts of data across teams

My first thought when I found out a client wanted to build a Data Mesh to manage their data was “How exciting! We will get to build an often talked about and rarely built data architectural style”. As the project unfolded, my enthusiasm did not wane.

As you can imagine, being one of the first to successfully build a Data Mesh involved a lot of learning and sometimes painful discoveries to overcome. First off though, in this blog I will talk about what issues the client wanted to overcome in opting for a Data Mesh architecture. After that I will cover off those “gotchas” because they are worth looking out for when embarking on a build.

Why did the client choose a Data Mesh architecture?

The client was a large banking organisation and their issues apply to large enterprises in any industry looking to do analytics at scale. The client was pushing to expand their digital capabilities in response to market dynamics and evolving regulatory reporting requirements.

It was in fact the regulatory requirements that drove the client’s desire to change their data platform paradigm. They needed to significantly improve their reporting across several different business areas. To deliver these numerous data projects they needed to execute projects in parallel. And this is where a Data Mesh can offer some real benefits.

With a Data Mesh all business units can get their own “data” node within the mesh (the traditional way has data stored in one location which can create a bottleneck). This allows business units to run to their own timelines and not be held back by a central team capacity. Also, nodes can be built with various capabilities and technologies which provides a lot of options for business units to innovate within the nodes.

The key take way – a Data Mesh allows for flexibility and self-governance of projects for large organisations looking to deliver data outcomes at pace. The diagram below sets out the business benefits.

What exactly is a Data Mesh?

The purpose of this blog is to focus on learnings and real-world design decisions with a Data Mesh, not the underlying concepts. To learn more about the architecture, I suggest you read up on Data Mesh.

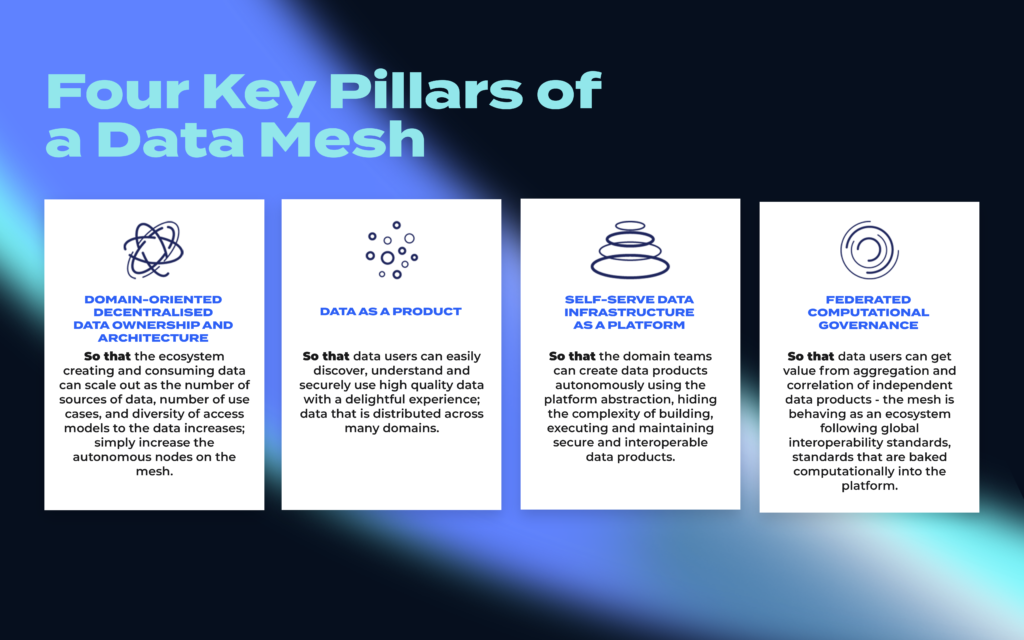

In a nutshell though, there are four key pillars of a Data Mesh. As you can see from the image below, they all have a focus on environment automation.

Building Nodes and a Datahub integral to the build of a Data Mesh

To build a Data Mesh, we needed to align the concept of a Node (for context a Node or Data Landing Zone is a templated configuration that is provisioned through automation to meet the specific requirements of a client/BU’s workload) with the principle of federated computational governance which is provisioned via automation (Terraform templates) and inherits all previously defined Node standards and policies.

Nodes support the principle of Data as a Product, and multiple Nodes can be chained together to build Composite Data Products. Data products need to support discovery and sharing so they can empower internal consumers.

Based on these concepts, our first task was to get started on the automation to provision multiple Data Mesh Nodes. This can be a mammoth task.

However, the good news for us was we had a good foundation to start with. LAB3 already had an offering focused on automation-first data platform acceleration called Modern Data Platform. This put us a long way ahead in terms of the build.

The need for a Control Plane for a heavily regulated organisation

Having to build a Data Mesh within the constraints of a heavily compliant industry presented its own set of challenges. This is where the control plane came into play and is why we built this in.

Nodes publish governance data back to a central control plane allowing for centralised governance and discovery. This also allows domain Nodes to use any technology as long as the various API’s for centralised governance are made available in the Node.

The API contract includes requirements to publish information relating to data quality metrics, metadata and data lineage.

Externally facing APIs also allow for the publishing of the data to specialised tools such as Alex, CluedIn, Purview for lineage or Profisee.

Further, beyond the technology and its key concepts, for the build we also needed to make changes to the processes that are run to deliver projects and then operate the solution.

The “gotchas” to consider before launching into a build

How Granular Should a Node be?

Nodes in the Data Mesh can represent a number of business units, projects or areas of knowledge (i.e customers). When designing the Data Mesh we had to make decisions on the number of nodes we would be creating and in turn supporting.

A number of factors came into play. With the client in question, we had complexity associated to the network design. Specifically, because we decided to have a subscription per node and we also needed to have VNet peering between them, on this basis the number of networking connections exponentially grew as we increased the number of nodes.

Specifically at 8 nodes it would need 38 VNet peering configuration (8×8+2) but at 10 it would be 102 creating a configuration and maintenance nightmare.

We landed on:

- A node per business area

- Multiple data products created for each project or sub-unit within this node.

This ensures that the business units can operate independently while still maintaining a level of supportability.

Managing Data Products and Polyglots

Data products are a key tenet of the Data Mesh philosophy, it allows teams to go at their own speed and helps ensure shared data is vetted and of sufficient quality. However, that paradigm has challenges as well. Specifically:

- How do node owners ensure that source system data (like GL accounts) can only be accessed through the data products of that data domain?

- What types of permissions should be granted and be who to the data products? How does the access lifecycle get managed?

- How do data products get published and in what format?

The challenge as it stands today is that the technology control tools (think data governance tools or access control tools) are only just catching up to the Data Mesh architectures. This is in turn can lead to challenges finding tools that meet the Data Mesh brief.

Also, data products need to speak a common data language so that the products can be catalogued and published using common formats. However not all data professionals use similar data technologies. For example, you can store and share in a Delta Lake format or JSON or flat files. Each format has pros and cons and varying levels of support in the tools that will manage the products. We landed on Delta format, but I think in the future we will need to define sharing format standards based on data type (i.e. structured, unstructured, pictures, documents etc).

Data Mesh means more than modernising technology

Data Mesh is of course, a deeply technological concept however it also requires a cultural and organisational change.

Specifically, the ways of working change when business units/domains gain greater control over their data outcomes.

For example, business stakeholders can build capabilities to execute without involving IT teams, but they also need to think wholistically about team composition, skill sets, and select the right tools to match them.

Tooling can be code oriented (like Python workbooks) or something visual like Azure Data Factory or Synapse Pipelines. Frameworks also such as DBT can standardise data transformation across multiple technologies.

Our takeaway, pay special attention to stakeholders, current skillset and tailor node technologies to match them.

Linkedin

Linkedin

Twitter

Twitter

Facebook

Facebook